Irvine, California, February 24, 2023: SimInsights has successfully completed the integration of GPT3.5 into HyperSkill to boost the accuracy of immersive simulations powered by conversational AI.

In 2017, with R&D funding from the National Science Foundation SBIR program, SimInsights began researching the role of voice-based interactions within immersive learning environments. Initially, the researchers used APIs provided by Amazon Web Services (AWS), namely Lex and Polly, to add voice to the simulations. It was immediately evident that voice added to the feeling of immersion and to ease of use. The research project also investigated the benefits of immersive learning and gathered data from 118 participants in a randomized controlled trial (RCT). Findings were published in a peer-reviewed journal and are available here.

Since then, the team has continued to conduct cutting-edge research as AI technologies advanced. Investigation of GPT3 began in 2020 and showed that although the model performed well with only a small number of samples, the language model BERT delivered higher accuracy. With GPT3.5, it is now possible to achieve high accuracy with 10x fewer sample utterances, which makes it possible to integrate the model within HyperSkill without the technical skills involved in transfer learning. This integration will enable instructional designers to create dialogue-rich scenarios without learning those skills.

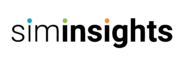

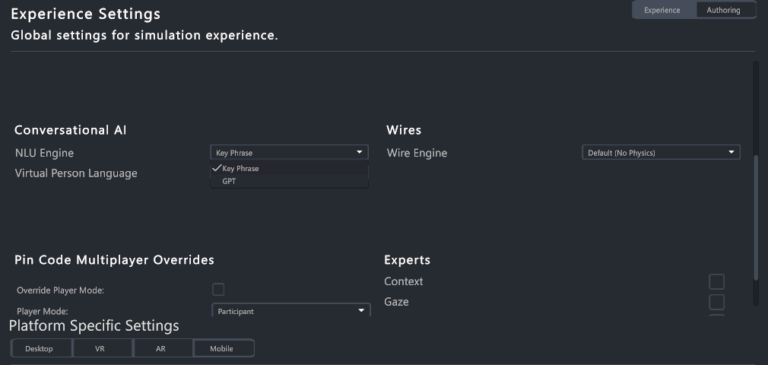

Given that GPT3.5 is a paid service, this AI model is currently available only in the enterprise and educational tiers of HyperSkill. HyperSkill enables instructional designers and subject matter experts to use generative AI technologies through a no-code authoring approach instead of a code-based approach. Selecting ChatGPT or GPT4 is as simple as choosing it from a dropdown, as shown in the screenshot.

Once the author makes this selection, the AI model is activated and begins listening to the user’s speech and generating responses. To learn more, please contact us.