3D Immersive Interactive and Intelligent Training for Clinicians

3D Immersive Training

The immersive training provides users with ideal verbal responses to challenging situations through the real-time feedback panel.

Objective

Our aim was to evaluate the usability and performance of the platform as compared to in-person training.

Overview

This project addresses communication and team training through the development of a set of virtual simulations using the HyperSkill platform developed by SimInsights. HyperSkill provides the capability to replicate/simulate the clinical environment within the virtual reality space, without the need to write code. Furthermore, the immersive training provides users with ideal verbal responses to challenging situations through the real-time feedback panel.

Challenges

- Communication and teamwork failures between healthcare team members are responsible for up to 70% of medical errors, because members of healthcare teams come from many different disciplines and from isolated education and training programs. Training in communication and teamwork skills has been shown to improve teamwork, clinical performance, and patient outcomes. Healthcare team training is effective across a variety of outcomes, including trainees’ perceptions of the usefulness of team training, acquisition of knowledge and skills, demonstration of trained knowledge and skills on the job, and patient and organizational outcomes.

- Traditional communication and team training sessions have consisted of classroom-based didactic presentations and/or resource-intensive, immersive simulator-based programs requiring in-person attendance and facilitated debriefing. These methods pose logistical challenges, such as assembling individual team members to train in person. Furthermore, there are limited opportunities to apply newly acquired skills within relevant contexts, to repeat practice and receive feedback, and to follow up to assess skills acquisition and retention.

Key Features

- Virtual Reality Simulation

- Conversational AI

- Deep Learning

- Dialog Systems

- Asynchronous Training

- Communication Skill Training

The Solution: Immersive 3D simulations with Conversational AI authored using HyperSkill

Authoring with HyperSkill

SimInsights used existing recorded media to create new storyboards and flowcharts, which were implemented using HyperSkill to create three immersive 3D simulations. To replicate the clinical environment in the VR space, the team visited a UCLA clinic and captured images with a 360-degree camera, which were provided to 3D artists as references. Additionally, a feedback panel was developed to debrief the learners by providing ideal responses, scoring learner responses, and measuring their confidence.

Natural Language Processing

For each of the three scenarios, a Natural Language Processing (NLP) based intent detection classifier was trained to map user speech to a fixed set of utterance labels. The transfer learning capabilities of the NVIDIA TAO Toolkit were used to fine-tune a pre-trained Bidirectional Encoder Representations from Transformers (BERT) model for intent classification.
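To make the idea of mapping speech to fixed utterance labels concrete, here is a minimal sketch in Python. It is not the fine-tuned BERT model described above: as a stand-in, it uses a toy nearest-example classifier based on bag-of-words cosine similarity, so that it runs with the standard library alone. The intent labels, example utterances, and the `classify` function with its `threshold` parameter are all hypothetical and were invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical intent labels and example utterances (assumptions for
# illustration only; not taken from the actual training scenarios).
EXAMPLES = {
    "speak_up": [
        "I need to stop the exam, this does not seem appropriate",
        "please stop, I am not comfortable with how this is going",
    ],
    "ask_clarification": [
        "can you explain what you are doing and why",
        "could you tell the patient what the next step is",
    ],
    "reassure_patient": [
        "you are safe, I am here with you",
        "it is okay, you can ask us to pause at any time",
    ],
}


def vectorize(text):
    """Lowercase the text and count its word tokens (bag of words)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(utterance, threshold=0.2):
    """Return (label, score) for the closest example utterance.

    Falls back to "unrecognized" when no example is similar enough.
    """
    query = vectorize(utterance)
    best_label, best_score = "unrecognized", 0.0
    for label, samples in EXAMPLES.items():
        for sample in samples:
            score = cosine(query, vectorize(sample))
            if score > best_score:
                best_label, best_score = label, score
    if best_score < threshold:
        return "unrecognized", best_score
    return best_label, best_score


label, score = classify("please stop the exam")
print(label, round(score, 2))
```

A word-overlap baseline like this only matches utterances that share vocabulary with the examples. A fine-tuned transformer model is typically chosen for this kind of task because it can also recognize paraphrases that share few or no words with the training utterances.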

Measurable Benefits

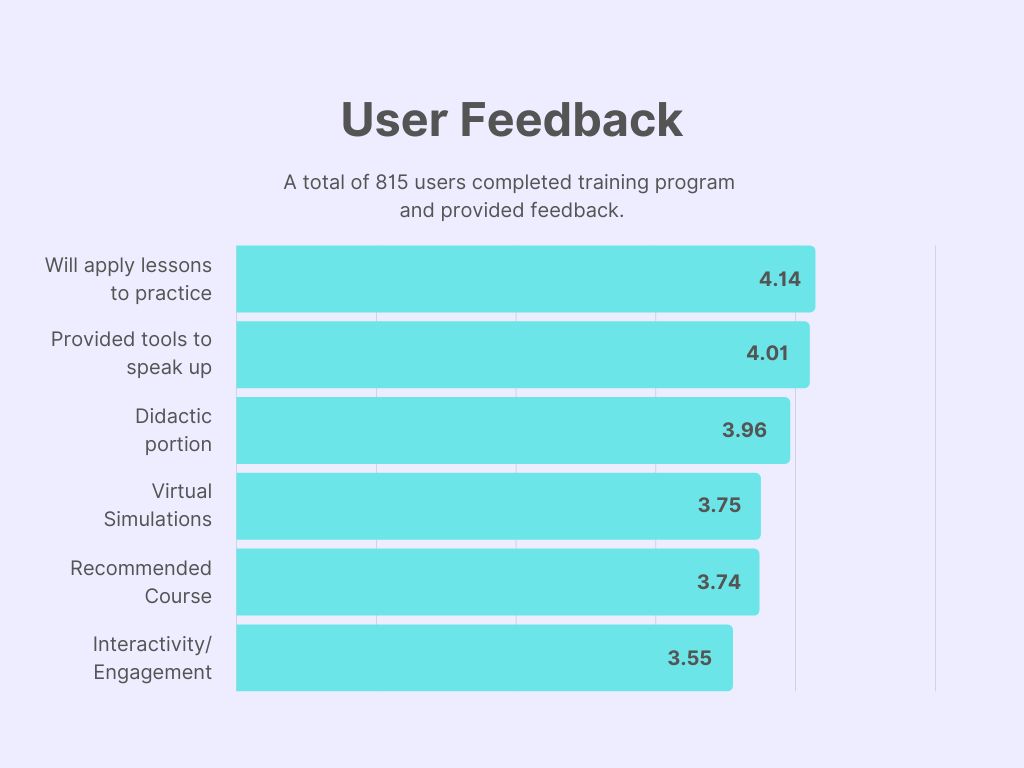

A total of 815 users completed the training program and provided feedback through survey questions covering different parameters of the training software, as shown in the chart. The majority of learners felt that the training program gave them the necessary tools to speak up and that they will be able to apply the lessons in practice.

1. The user feedback shows that the clinical workforce perceives such training as highly innovative and engaging compared with traditional training.

2. The project demonstrates the feasibility of using no-code software to dramatically reduce the cost and time required to create such innovative training modules.

Testimonials

“I now realize why my role as a chaperone matters”

“…I will be a better manager after attending this training”

“Being able to [be] active in the scenarios, although I was nervous… allows me to know what to do if this were to happen in a clinical setting.”

Conferences

This case study was accepted at several conferences, where representatives of UCLA Health or SimInsights presented the findings.

7th International XR Conference 2022

Date: 27-29 April 2020

Title: 3D Immersive, Interactive and Intelligent Training for Clinicians

Presenter: Rajesh Jha, CEO, SimInsights, Inc.

ICCH 2021 International Conference on Communication in Healthcare

Date: October 17-20, 2021

Title: Simulation Training to Empower Medical Chaperones in Speaking Up for Patient Safety

Presenters

Yue Ming Huang, EdD, MHS

UCLA Health

Miguel Drayton, MFA, MS

UCLA Health